Hi from Berlin,

For our team of journalists from different countries writing a European newsletter, using artificial intelligence for translation has long been part of our daily routine. But as the AI revolution rapidly accelerates, new fears and questions have arisen, and certainly not only for us.

Will computers take our jobs? Will Europe once again be outmanoeuvred by tech giants in the US and China, as we saw with the rise of social networks? How can you tell if an AI is wrong, biased or discriminatory? Will people be divided into those who control technology and those who are at its mercy? And how can societies retain control of AI? Or is that already a lost cause, and do we now need to regain control?

This debate is about power, resources and who defines the truth.

In this edition, we would like to tackle some of these questions.

Enjoy reading!

Judith Fiebelkorn, this week’s Editor-in-Chief

Indrek Seppo is a data scientist and AI expert at the University of Tartu.

European Focus: A few months ago, technology entrepreneurs published a joint statement calling for a six-month pause in the development of AI systems. What do you think of this idea?

Indrek Seppo: I don’t think it would be technically feasible. Besides, I have no faith that we would figure out in six months how to proceed. Even if artificial intelligence develops consciousness, which in my opinion is inevitable, we do not know today how it will behave or how it will be used.

Should countries prepare legislation or some other institutional framework to regulate the increasingly widespread use of AI?

Yes, our laws definitely need to change because of AI. But the problem is that nobody knows how. Regulation just for the sake of it would not help anyone and might only worsen the situation.

A simple example: We have millions of graphics cards in people’s hands. How do you regulate what a teenager does in his bedroom? Good luck!

What are the most common misconceptions about AI?

Artificial intelligence is not omnipotent. It will be smart, and it will probably have consciousness, but it will not be omnipotent. Even for an AI, taking over the world would be a serious challenge, because superhuman intellect alone is not enough.

Another misconception I see is that AI is somehow just a statistical machine that imitates intellect. If something looks like a duck, quacks like a duck and functions like a duck, then it is a duck!

Many people fear that AI will want to reproduce and take over the world. That could happen, but it is by no means certain. Our human desires evolved long before our minds did. Maybe in a few years our best psychiatrists will be investigating why artificial intelligence keeps wanting to switch itself off.

Will there ever be a European alternative to ChatGPT? Training large language models requires huge processing capacity, an expensive resource that even major European universities and companies do not have.

According to a study by the German AI Association, setting up such a data centre would cost 350 million euros, the equivalent of building 50 km of motorway. The association has launched an initiative together with nine European countries to develop the project.

However, it is questionable whether the German government will co-finance the project. The budget has been under discussion for months.

Last March, the Italian data protection authority (DPA) temporarily blocked OpenAI’s ChatGPT in Italy. The authority criticised OpenAI for failing to explain how it trains its algorithms and for not offering users a way to delete or correct inaccurate data. OpenAI has since made some modifications, and Italy has allowed the software again, but doubts remain.

OpenAI has stated that the AI is trained using “information that is publicly available on the internet”. In other words, the web is “crawled” to collect training data. Such activity is problematic under the EU’s General Data Protection Regulation (GDPR), especially when it could enable criminal activity.

For example, a malicious actor could use ChatGPT to access a public blog post of mine about LGBTQ+ unions and compile a database of LGBTQ+ individuals that could then be sold to a reactionary government.

OpenAI also mentions another source: “information that we license from third parties”. It is unclear who these “third parties” are and how they collect their data. The GDPR prohibits the use of unlawfully compiled databases.

The second issue concerns the rights of data subjects. The DPA demanded that ChatGPT allow data to be removed or rectified at the request of the data subject. The company has made some changes but has declared that, for technical reasons, it cannot guarantee that data will be rectified.

The issue is not unique to ChatGPT, as these technologies are increasingly used in other areas, such as recruitment. If you were applying for a job, for example, a recruiter could reject you because of inaccurate information about you in an illegally created database.

These are some of the unanswered questions that require a clear position from national governments and European institutions.

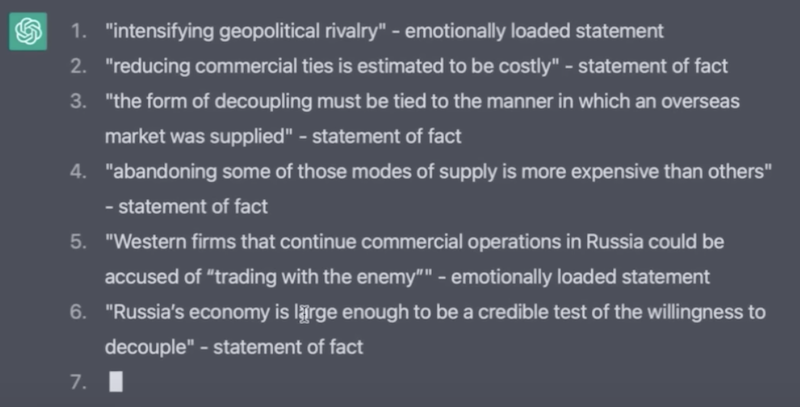

“Are there any emotional sentences, loaded statements, rhetorical devices in the given text? Please identify, tokenise and list all of them.”

The image above shows ChatGPT’s answer to this prompt, which Tymofiy Mylovanov, President of the Kyiv School of Economics, gave during a masterclass on using AI to detect and combat disinformation. The text being analysed was a study by the University of St. Gallen in Switzerland about Western businesses that remained in Russia after its 2022 invasion of Ukraine.

“Uncovering and busting disinformation is one of the significant challenges Ukraine is facing. If you choose the prompts carefully, ChatGPT can analyse massive amounts of information, not as well as trained humans, but in just a few minutes,” says Mylovanov.

New AI tools have a positive image in Ukraine and are used in distance learning, business meetings, budget planning and warfare. At the same time, officials and experts are calling for a policy on ChatGPT use. There have also been disappointments, for instance when ChatGPT answers users with narratives that echo Russian propaganda.

“The use of AI in illustration is unethical,” argued Mikel Janín, a renowned comic artist and reader of El Confidencial, when he cancelled his subscription to our newspaper. His was not an isolated criticism. It reflected the discontent many users expressed about an experiment we conducted last April, in which we used an AI tool to create an illustration and tweeted about it.

I believe there are many lessons to be learned from this episode. A newspaper such as El Confidencial gains and loses readers every day, but losing Mikel hit me particularly hard, and I have written him a letter urging him to come back:

Dear Mikel,

Please accept my apologies. The experiment was limited in scope. The image was clearly labelled as AI-generated, and it was explained in a Twitter thread. However, communication with our audience is crucial, and the tweet was undoubtedly not enough to convey our message. As a subscriber, you deserve an explanation.

I’ve seen major media outlets decline because they failed to accept, or outright refused, technological change. We cannot expect a cutting-edge newspaper to refrain from experimenting with such innovations. The arrival of AI in the media is unstoppable, and those who do not adapt will perish.

The illustration experiment was not intended to replace the work of a professional. It was conducted by our own team of designers, who have been experimenting with the most groundbreaking technology in decades. The formats, infographics and special features they have created (many of them award-winning) testify to the value we place on graphic art.

The best way to approach the discussion about AI is not to deny it exists. Subscribers such as yourself can make their voices heard in our newsroom and scrutinise the ethical use of any technology. I invite you and everyone else who feels disappointed not to abandon us over a misstep. Please come back and engage in the debate with us. After all, we are also afraid of the implications of AI.

Thanks for reading the 31st edition of European Focus,

People say that the best way to cope with fears is to face them head-on: to reflect on them and to talk about them.

What do you think? Do we have reason to be concerned about the use of AI? How should we deal with this in our editorial team?

Please join the debate and send your feedback to info@europeanfocus.eu.

See you next Wednesday!

Judith Fiebelkorn